
Apps (Nextcloud, Peertube...)

In this section, we cover the following web applications:

| Name | Status | Note |
|---|---|---|
| Nextcloud | ✅ | Both Primary Storage and External Storage are supported |
| Peertube | ✅ | Must be configured with the website endpoint |
| Mastodon | ❓ | Not yet tested |
| Matrix | ✅ | Tested with synapse-s3-storage-provider |
| Pixelfed | ❓ | Not yet tested |
| Pleroma | ❓ | Not yet tested |
| Lemmy | ❓ | Not yet tested |
| Funkwhale | ❓ | Not yet tested |
| Misskey | ❓ | Not yet tested |
| Prismo | ❓ | Not yet tested |
| Owncloud OCIS | ❓ | Not yet tested |

Nextcloud

Nextcloud is a popular file synchronisation and backup service. By default, Nextcloud stores its data on the local filesystem. If you want to expand your storage to aggregate multiple servers, Garage is the way to go.

An S3 backend can be configured in two ways on Nextcloud: either as Primary Storage or as External Storage. Primary storage stores all your data on S3, in an opaque manner, and provides the best performance. External storage lets you select which data is stored on S3 and preserves your file hierarchy in S3, but it might be slower.

We cover both methods below. Before following this guide, we assume you have completed two preliminary steps: first, you have an installed and configured Nextcloud instance; second, you have created a key and a bucket.

As a reminder, you can create a key for your Nextcloud instance as follows:

garage key new --name nextcloud-key

Note the Key ID and the Secret key somewhere; they will be needed later.

Then you can create a bucket and give read/write rights to your key on this bucket with:

garage bucket create nextcloud

garage bucket allow nextcloud --read --write --key nextcloud-key

Primary Storage

Now edit your Nextcloud configuration file to enable object storage.

On my installation, the configuration file is located at the following path: /var/www/nextcloud/config/config.php.

We will add a new root key named objectstore to the $CONFIG dictionary:

<?php
$CONFIG = array(
/* your existing configuration */
'objectstore' => [
    'class' => '\\OC\\Files\\ObjectStore\\S3',
    'arguments' => [
        'bucket' => 'nextcloud', // Your bucket name, must be created before
        'autocreate' => false, // Garage does not support autocreate
        'key' => 'xxxxxxxxx', // The Key ID generated previously
        'secret' => 'xxxxxxxxx', // The Secret key generated previously
        'hostname' => '127.0.0.1', // Can also be a domain name, eg. garage.example.com
        'port' => 3900, // Put your reverse proxy port or your S3 API port
        'use_ssl' => false, // Set it to true if you have a TLS enabled reverse proxy
        'region' => 'garage', // Garage has only one region named "garage"
        'use_path_style' => true // Garage supports only path style, must be set to true
    ],
],
);

That's all: your Nextcloud will now store all your data on S3. To test your new configuration, just reload your Nextcloud webpage and start sending data.
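It can also help to check the same settings with an independent S3 client. The following is a minimal sketch, assuming the aws CLI is installed (it is used later in this guide) and that the hostname, port and use_ssl values are the ones from the example above. The helper name `endpoint_url` is hypothetical and only serves this illustration.

```shell
#!/bin/bash
# Hypothetical helper: turn Nextcloud's hostname/port/use_ssl settings
# into the endpoint URL expected by generic S3 clients.
endpoint_url() {
  local host="$1" port="$2" use_ssl="$3" scheme="http"
  if [ "$use_ssl" = "true" ]; then
    scheme="https"
  fi
  printf '%s://%s:%s\n' "$scheme" "$host" "$port"
}

# Values copied from the objectstore example above.
ENDPOINT="$(endpoint_url 127.0.0.1 3900 false)"
echo "$ENDPOINT"

# With the key exported as AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY,
# listing the bucket should succeed (uncomment to run against your cluster):
# aws --endpoint-url "$ENDPOINT" --region garage s3 ls s3://nextcloud
```

If the listing works from the command line but Nextcloud still fails, the problem is more likely in config.php than in the key or bucket permissions.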

External link: Nextcloud Documentation > Primary Storage

External Storage

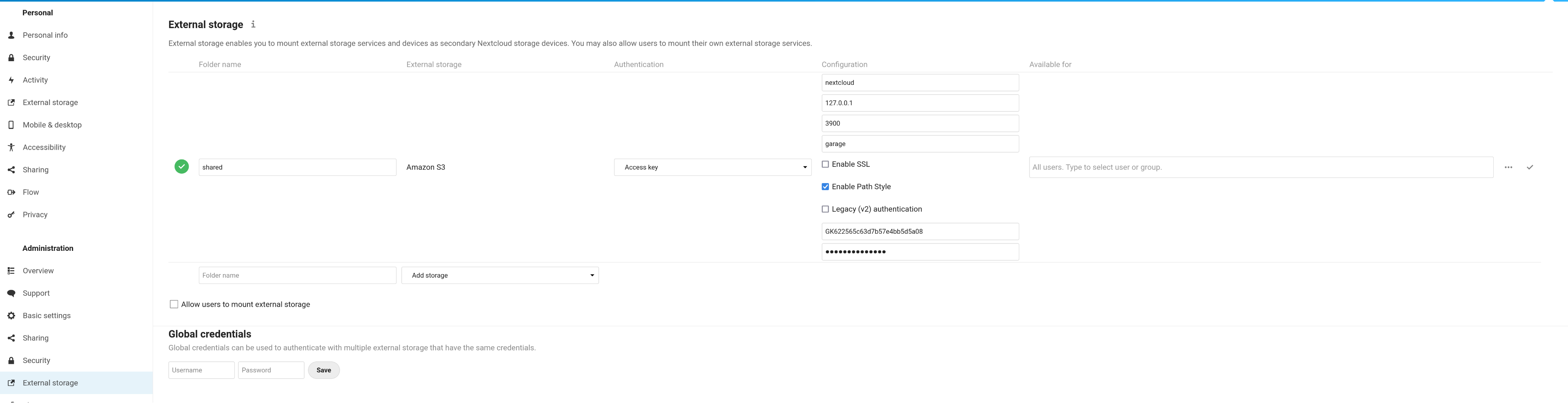

From the GUI. Activate the "External storage support" app from the "Applications" page (click on your account icon on the top right corner of your screen to display the menu). Go to your parameters page (also located below your account icon). Click on external storage (or the corresponding translation in your language).

Add a new external storage. Put what you want in "folder name" (eg. "shared"). Select "Amazon S3". Keep "Access Key" for the Authentication field. In Configuration, put your bucket name (eg. nextcloud), the host (eg. 127.0.0.1), the port (eg. 3900 or 443), the region (garage). Tick the SSL box if you have put an HTTPS proxy in front of garage. You must tick the "Path access" box and you must leave the "Legacy authentication (v2)" box empty. Put your Key ID (eg. GK...) and your Secret Key in the last two input boxes. Finally click on the tick symbol on the right of your screen.

Now go to your "Files" app and a new "linked folder" has appeared with the name you chose earlier (eg. "shared").

External link: Nextcloud Documentation > External Storage Configuration GUI

From the CLI. First install the external storage application:

php occ app:install files_external

Then add a new mount point with:

php occ files_external:create \

-c bucket=nextcloud \

-c hostname=127.0.0.1 \

-c port=3900 \

-c region=garage \

-c use_ssl=false \

-c use_path_style=true \

-c legacy_auth=false \

-c key=GKxxxx \

-c secret=xxxx \

shared amazons3 amazons3::accesskey

Adapt the hostname, port, use_ssl, key, and secret entries to your configuration.

Do not change the use_path_style and legacy_auth entries: other configurations are not supported.
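To make the adaptable entries explicit, the command above can be assembled from variables and printed for review before running it. This is only a sketch: the variable names (`S3_HOST`, `S3_PORT`, `S3_USE_SSL`, `S3_KEY`, `S3_SECRET`) and the function name `occ_create_command` are assumptions made for this example, while the `-c` options are exactly those shown above.

```shell
#!/bin/bash
# Site-specific values (hypothetical variable names): adapt to your setup.
S3_HOST="127.0.0.1"
S3_PORT="3900"
S3_USE_SSL="false"
S3_KEY="GKxxxx"
S3_SECRET="xxxx"

# Print the occ invocation instead of running it, so it can be reviewed first.
# use_path_style and legacy_auth are hard-coded because Garage requires them.
occ_create_command() {
  printf 'php occ files_external:create'
  # printf reuses the format string once per remaining argument.
  printf ' -c %s' \
    "bucket=nextcloud" \
    "hostname=$S3_HOST" \
    "port=$S3_PORT" \
    "region=garage" \
    "use_ssl=$S3_USE_SSL" \
    "use_path_style=true" \
    "legacy_auth=false" \
    "key=$S3_KEY" \
    "secret=$S3_SECRET"
  printf ' shared amazons3 amazons3::accesskey\n'
}

occ_create_command
```

Only the five variables at the top are meant to change between installations; the printed command can then be copied and run from your Nextcloud directory.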

External link: Nextcloud Documentation > occ command > files external

Peertube

Peertube offers a clever S3 integration: it directly exposes the storage endpoint to clients instead of proxying requests through the application. In other words, Peertube is only responsible for the "control plane" and offloads the "data plane" to Garage. In return, this system is a bit harder to configure. We show how to configure Peertube with Garage, which lets you spread the load and the bandwidth usage across the Garage cluster.

Create resources in Garage

Create a key for Peertube:

garage key new --name peertube-key

Note the Key ID and the Secret key somewhere; they will be needed later.

We need two buckets, one for webtorrent videos (named peertube-videos) and one for streaming playlists (named peertube-playlists).

garage bucket create peertube-videos

garage bucket create peertube-playlists

Now we allow our key to read and write on these buckets:

garage bucket allow peertube-playlists --read --write --owner --key peertube-key

garage bucket allow peertube-videos --read --write --owner --key peertube-key

We also need to expose these buckets publicly to serve their content to users:

garage bucket website --allow peertube-playlists

garage bucket website --allow peertube-videos

Finally, we must allow Cross-Origin Resource Sharing (CORS). CORS is required by your browser to allow requests triggered from the Peertube website (eg. peertube.tld) to your bucket's domain (eg. peertube-videos.web.garage.tld).

export CORS='{"CORSRules":[{"AllowedHeaders":["*"],"AllowedMethods":["GET"],"AllowedOrigins":["*"]}]}'

aws --endpoint-url http://s3.garage.localhost s3api put-bucket-cors --bucket peertube-playlists --cors-configuration "$CORS"

aws --endpoint-url http://s3.garage.localhost s3api put-bucket-cors --bucket peertube-videos --cors-configuration "$CORS"
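The rule above allows GET requests from any origin. If you would rather name a single origin, the same JSON can be generated with that origin filled in. This is a minimal sketch, assuming your instance is served at https://peertube.tld (the example domain used above). `cors_rules` is a hypothetical helper, and a restricted origin may not suit playback from other federated instances, so check this against your own setup.

```shell
#!/bin/bash
# Hypothetical helper: print a CORS configuration allowing GET requests
# from a single origin (defaults to "*", i.e. the rule used above).
cors_rules() {
  local origin="${1:-*}"
  printf '{"CORSRules":[{"AllowedHeaders":["*"],"AllowedMethods":["GET"],"AllowedOrigins":["%s"]}]}\n' "$origin"
}

CORS="$(cors_rules 'https://peertube.tld')"
echo "$CORS"

# Apply the rules, then read them back (uncomment to run against your cluster):
# aws --endpoint-url http://s3.garage.localhost s3api put-bucket-cors \
#   --bucket peertube-videos --cors-configuration "$CORS"
# aws --endpoint-url http://s3.garage.localhost s3api get-bucket-cors \
#   --bucket peertube-videos
```

Reading the configuration back with get-bucket-cors is a quick way to confirm that the rules were stored as intended before testing in a browser.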

These buckets are now accessible on the web port (by default 3902) at the following URL: http://<bucket><root_domain>:<web_port>, where the root domain is defined in your configuration file (by default .web.garage). With the default values, we currently have the following URLs:

- http://peertube-playlists.web.garage:3902

- http://peertube-videos.web.garage:3902

Make sure you (will) have a corresponding DNS entry for them.
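The URL pattern described above can be sketched as a small helper, which is handy when preparing DNS entries or the base_url values for Peertube. `ROOT_DOMAIN` and `WEB_PORT` are hypothetical variable names that mirror the defaults quoted in the text, and `bucket_url` is an illustrative function, not a Garage command.

```shell
#!/bin/bash
# Defaults quoted in the text; adjust them to match your configuration file.
# Note that the root domain includes its leading dot.
ROOT_DOMAIN=".web.garage"
WEB_PORT=3902

# Hypothetical helper: build the website URL of a bucket.
bucket_url() {
  # $1: bucket name
  printf 'http://%s%s:%s\n' "$1" "$ROOT_DOMAIN" "$WEB_PORT"
}

for bucket in peertube-playlists peertube-videos; do
  bucket_url "$bucket"
done
```

The host part of each printed URL is the name that needs a DNS entry pointing to your Garage web endpoint or to the reverse proxy in front of it.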

Configure Peertube

You must edit the file named config/production.yaml. We only modify the root key named object_storage:

object_storage:
  enabled: true

  # Put localhost only if you have a garage instance running on that node
  endpoint: 'http://localhost:3900' # or "garage.example.com" if you have TLS on port 443

  # Garage supports only one region for now, named garage
  region: 'garage'

  credentials:
    access_key_id: 'GKxxxx'
    secret_access_key: 'xxxx'

  max_upload_part: 2GB

  streaming_playlists:
    bucket_name: 'peertube-playlists'

    # Keep it empty for our example
    prefix: ''

    # You must fill this field to make Peertube use our reverse proxy/website logic
    base_url: 'http://peertube-playlists.web.garage.localhost' # Example: 'https://mirror.example.com'

  # Same settings but for webtorrent videos
  videos:
    bucket_name: 'peertube-videos'
    prefix: ''
    # You must fill this field to make Peertube use our reverse proxy/website logic
    base_url: 'http://peertube-videos.web.garage.localhost'

That's all

Everything should be configured now: simply restart Peertube and try to upload a video.

Peertube will start by serving the video from its own domain while it is encoding. Once the encoding is done, the video is uploaded to Garage. You can now reload the page and see in your browser console that data are fetched directly from your bucket.

External link: Peertube Documentation > Remote Storage

Mastodon

https://docs.joinmastodon.org/admin/config/#cdn

Matrix

Matrix is a chat communication protocol. Its main stable server implementation, Synapse, provides a module to store media on an S3 backend. Additionally, the community has developed a server-independent media store supporting S3. This is made possible by the design of the Matrix API, and it works with implementations like Conduit, Dendrite, etc.

synapse-s3-storage-provider (synapse only)

Supposing you have a working synapse installation, you can add the module with pip:

pip3 install --user git+https://github.com/matrix-org/synapse-s3-storage-provider.git

Now create a bucket and a key for your matrix instance (note your Key ID and Secret Key somewhere, they will be needed later):

garage key new --name matrix-key

garage bucket create matrix

garage bucket allow matrix --read --write --key matrix-key

Then you must edit your server configuration (eg. /etc/matrix-synapse/homeserver.yaml) and add the media_storage_providers root key:

media_storage_providers:
- module: s3_storage_provider.S3StorageProviderBackend
  store_local: True # do we want to store on S3 media created by our users?
  store_remote: True # do we want to store on S3 media created
                     # by users of other servers federated to ours?
  store_synchronous: True # do we want to wait that the file has been written before returning?
  config:
    bucket: matrix # the name of our bucket, we chose matrix earlier
    region_name: garage # only "garage" is supported for the region field
    endpoint_url: http://localhost:3900 # the path to the S3 endpoint
    access_key_id: "GKxxx" # your Key ID
    secret_access_key: "xxxx" # your Secret Key

Note that uploaded media will also be stored locally and this behavior cannot be deactivated; it is even required for some operations like resizing images. In effect, your local filesystem acts as a cache, but without any automated way to garbage collect it.

We can build our garbage collector with s3_media_upload, a tool provided with the module.

If you installed the module with the command provided before, you should be able to bring it in your path:

PATH=$HOME/.local/bin/:$PATH

command -v s3_media_upload

Now we can write a simple script (eg ~/.local/bin/matrix-cache-gc):

#!/bin/bash

## CONFIGURATION ##

# Exported so that s3_media_upload, started as a child process, can read them

export AWS_ACCESS_KEY_ID=GKxxx

export AWS_SECRET_ACCESS_KEY=xxxx

S3_ENDPOINT=http://localhost:3900

S3_BUCKET=matrix

MEDIA_STORE=/var/lib/matrix-synapse/media

PG_USER=matrix

PG_PASS=xxxx

PG_DB=synapse

PG_HOST=localhost

PG_PORT=5432

## CODE ##

PATH=$HOME/.local/bin/:$PATH

cat > database.yaml <<EOF

user: $PG_USER

password: $PG_PASS

database: $PG_DB

host: $PG_HOST

port: $PG_PORT

EOF

s3_media_upload update-db 1d

s3_media_upload --no-progress check-deleted $MEDIA_STORE

s3_media_upload --no-progress upload $MEDIA_STORE $S3_BUCKET --delete --endpoint-url $S3_ENDPOINT

This script lists all the media that were not accessed in the last 24 hours according to your database. It then checks which files in this list still exist in the local media store. Files that are still in the cache are uploaded to S3 if they are not already present (in case of a crash or an initial synchronisation). Finally, the script deletes these files from the cache.

Make this script executable and check that it works:

chmod +x $HOME/.local/bin/matrix-cache-gc

matrix-cache-gc

Add it to your crontab. Open the editor with:

crontab -e

And add a new line. For example, to run it every 10 minutes:

*/10 * * * * $HOME/.local/bin/matrix-cache-gc

External link: Github > matrix-org/synapse-s3-storage-provider

matrix-media-repo (server independent)

External link: matrix-media-repo Documentation > S3

Pixelfed

Pixelfed Technical Documentation > Configuration

Pleroma

Pleroma Documentation > Pleroma.Uploaders.S3

Lemmy

Lemmy uses pict-rs, which supports S3 backends.

Funkwhale

Funkwhale Documentation > S3 Storage

Misskey

Misskey Github > commit 9d94424

Prismo

Prismo Gitlab > .env.production.sample

Owncloud Infinite Scale (ocis)

OCIS could be compatible with S3:

Unsupported

- Mobilizon: No S3 integration

- WriteFreely: No S3 integration

- Plume: No S3 integration